Background

The UNIFYBroker-based Active Directory provisioning model uses a PowerShell connector in Broker to perform “once-off” operations on an AD user account, such as:

- Account creation

- Account deletion

- Mailbox enable/disable

The Broker PowerShell connector has the following configuration components:

- “Import All” – e.g. pre-load all existing users from AD

- “Import Changes” – e.g. identify and load newly created users from AD, and remove any deleted users from AD

- “Add Entities” – called when a new user record is created in MIM, to trigger and perform creation of the required AD account

- “Update Entities” – called when user attributes are updated in MIM, to trigger any required actions (such as enabling/disabling an Exchange mailbox)

- “Delete Entities” – called when a user record is deleted in MIM, to trigger and perform deletion of the corresponding AD account

The PowerShell connector can also be used for AD objects other than users.

[CUSTOMERX] Solution

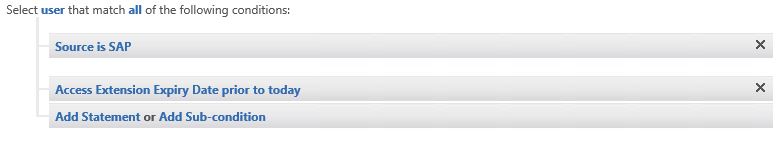

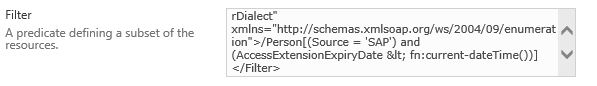

A key element of the user lifecycle at [CUSTOMERX] is the “Identity State” of each user. It is derived from various user attributes (e.g. HR contract start date, manual disable flag, last login timestamp) and in turn drives the values of various other attributes (e.g. AD Distinguished Name, account enabled/disabled state, Exchange mailbox enabled/disabled). Similar attributes (“Group State” and “Contact State”) exist for AD groups and AD mail contacts.
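The two-stage relationship (source attributes → state → dependent attributes) can be sketched as a small Python model. The state names, the 90-day inactivity threshold and the OU values below are hypothetical placeholders, not the actual [CUSTOMERX] rules, which are implemented in MIM.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, Optional

# Hypothetical threshold: the real rule set is configured in MIM.
INACTIVITY_LIMIT = timedelta(days=90)

@dataclass
class UserInputs:
    contract_start: date        # e.g. HR contract start date
    manually_disabled: bool     # e.g. manual disable flag
    last_login: Optional[date]  # e.g. last login timestamp (None if never)

def identity_state(user: UserInputs, today: date) -> str:
    """Derive a hypothetical Identity State from the source attributes."""
    if user.manually_disabled:
        return "Disabled"
    if today < user.contract_start:
        return "PreStart"
    if user.last_login is not None and today - user.last_login > INACTIVITY_LIMIT:
        return "Inactive"
    return "Active"

# Hypothetical mapping from each state to the attributes it drives.
STATE_DRIVES: Dict[str, Dict[str, object]] = {
    "PreStart": {"parent_ou": "OU=PreStart", "account_enabled": False, "mailbox_enabled": False},
    "Active":   {"parent_ou": "OU=Active",   "account_enabled": True,  "mailbox_enabled": True},
    "Inactive": {"parent_ou": "OU=Inactive", "account_enabled": False, "mailbox_enabled": True},
    "Disabled": {"parent_ou": "OU=Disabled", "account_enabled": False, "mailbox_enabled": False},
}
```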

The following sections document the specific actions performed by each Broker connector:

AD User connector

- Import All: load all user objects from AD (Get-ADUser command)

- Import Changes: identify new and deleted user objects in AD (Get-ADObject command)

- Add Entities: create AD user account (New-ADUser command)

- Update Entities: depending on the Identity State attribute (from MIM), enable or disable the user’s Exchange mailbox (Enable-Mailbox and Disable-Mailbox commands via a remote PSSession to the Exchange server)

- Delete Entities: delete AD user account (Remove-ADObject command)

AD Group connector

- Import All: load all group objects from AD (Get-ADGroup command)

- Import Changes: identify new and deleted group objects in AD (Get-ADObject command)

- Add Entities: create AD group object (New-ADGroup command)

- Update Entities: depending on the Group State attribute (from MIM), hide or show the group’s address in the GAL (Set-DistributionGroup command via a remote PSSession to the Exchange server)

- Delete Entities: delete AD group object (Remove-ADObject command)

AD Contact connector

- Import All: load all contact objects from AD (Get-ADObject command)

- Import Changes: identify new and deleted contact objects in AD (Get-ADObject command)

- Add Entities: create AD contact object (New-ADObject command)

- Update Entities: depending on the Contact State attribute (from MIM), mail-enable the contact object (Set-MailContact command via a remote PSSession to the Exchange server)

- Delete Entities: delete AD contact object (Remove-ADObject command)

These three Broker connectors each have a corresponding Broker adapter, but share a single “Provisioning” MA within MIM. Refer to the Provisioning MA configuration and the PowerShell connector implementation for important aspects of the solution (e.g. the minimum attributes that must be exported from MIM in order to create the various AD objects).
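A guard at the start of “Add Entities” can catch missing inputs before any New-AD* command runs. The Python sketch below shows the check only. The attribute names are hypothetical placeholders (the authoritative list is in the Provisioning MA configuration), although some inputs are genuinely mandatory; for example, New-ADGroup needs a group scope.

```python
from typing import Dict, List, Mapping, Optional

# Hypothetical minimum attribute sets per object type; the real lists are
# defined by the Provisioning MA configuration and the Add Entities code.
REQUIRED_FOR_CREATE: Dict[str, List[str]] = {
    "user": ["accountName", "parentOU", "identityState"],
    "group": ["groupName", "parentOU", "groupScope", "groupState"],
    "contact": ["contactName", "parentOU", "mail", "contactState"],
}

def missing_for_create(object_type: str,
                       attributes: Mapping[str, Optional[str]]) -> List[str]:
    """Return required attributes that are absent or empty in a MIM export."""
    return [name for name in REQUIRED_FOR_CREATE[object_type]
            if not attributes.get(name)]
```

An entity with missing attributes can then be recorded as a failed operation instead of being passed to New-ADUser, New-ADGroup or New-ADObject.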

Issues Encountered and Key Learnings

The following important lessons were learned during implementation.

“Import All” cannot be treated as a periodic baseline, as it would be under our normal MIM best practices, because each run resets (nulls) every connector attribute value other than those it sets directly. MIM only learns of the cleared values through a full import and full synchronisation; a delta import/sync does not notify MIM of the changes. The next MIM export then has to restore every exported attribute value (in the [CUSTOMERX] case, Identity/Group/Contact State), which re-triggers the “Update Entities” code for every object (in the [CUSTOMERX] case, an attempt to re-enable or re-disable every user’s Exchange mailbox).
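The knock-on effect can be illustrated with a toy Python model of a connector. It is not Broker’s implementation: the dictionaries, function names and the single MIM-exported attribute (IdentityState) are simplifying assumptions, and the MIM full import/sync step is collapsed into a direct comparison.

```python
from typing import Dict, List, Optional

Entity = Dict[str, Optional[str]]

# Hypothetical: attributes whose values only MIM supplies (via export).
MIM_EXPORTED = ("IdentityState",)

def import_all(ad_objects: Dict[str, Entity]) -> Dict[str, Entity]:
    """Rebuild the connector from AD alone, as a baseline Import All would.

    Anything Import All does not set directly comes back as None,
    including values that MIM exported earlier.
    """
    rebuilt: Dict[str, Entity] = {}
    for key, ad_attrs in ad_objects.items():
        entity: Entity = dict(ad_attrs)
        for attr in MIM_EXPORTED:
            entity[attr] = None
        rebuilt[key] = entity
    return rebuilt

def objects_needing_update(connector: Dict[str, Entity],
                           desired: Dict[str, Entity]) -> List[str]:
    """Objects whose MIM-exported values differ from the connector's, i.e.
    the objects the next export would send to Update Entities."""
    return sorted(
        key for key, wanted in desired.items()
        if key in connector
        and any(connector[key].get(a) != wanted.get(a) for a in MIM_EXPORTED)
    )
```

Before the baseline no update is pending; after it, every object differs from MIM’s desired state, so the next export re-runs Update Entities for all of them.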

“Import Changes” functionality must be implemented; it cannot be set to “None”. Because regular baselining of the connector is problematic and best avoided (see the previous paragraph), an incremental synchronisation method is needed to keep the connector in step with the source AD data. Without it, objects created directly in AD by other systems will not be visible in the connector, and consequently won’t be created in the MIM “Provisioning” MA. MIM will then attempt to create the already-existing AD object, and the provisioning request will fail.
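One common way to implement incremental import is a change watermark: remember the newest change seen, and on each run fetch only objects changed after it, including deleted ones. The Python sketch below models that logic only. It assumes a per-object change timestamp and a deleted flag (in AD, Get-ADObject can return deleted objects when given -IncludeDeletedObjects); whether the [CUSTOMERX] connector uses exactly this approach is not documented here.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Tuple

@dataclass
class ADObject:
    guid: str
    when_changed: datetime
    is_deleted: bool = False   # tombstoned (deleted) object

def import_changes(objects: List[ADObject], watermark: datetime
                   ) -> Tuple[List[str], List[str], datetime]:
    """Return (changed, deleted, new_watermark) for objects changed after
    `watermark`. `changed` holds live objects (new or modified) to add or
    refresh in the connector; `deleted` holds objects to remove from it."""
    recent = [o for o in objects if o.when_changed > watermark]
    changed = sorted(o.guid for o in recent if not o.is_deleted)
    deleted = sorted(o.guid for o in recent if o.is_deleted)
    # Advance the watermark only as far as the newest change actually seen.
    new_watermark = max((o.when_changed for o in recent), default=watermark)
    return changed, deleted, new_watermark
```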

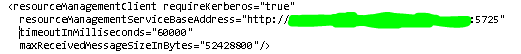

The default timeout for MIM export profile runs against ECMA2 connectors such as Broker is 60 seconds, and the default batch size is 5,000 objects. At [CUSTOMERX], Broker was unable to process requests from MIM fast enough, leading to timeouts that manifest in MIM as a “cd-error” on each exported object, with no other useful information logged anywhere. It is therefore highly recommended that the timeout be increased significantly. Decreasing the batch size may also help, but this approach was not tested and is unconfirmed.
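Assuming the timeout applies to each batch that MIM passes to Broker, the defaults imply a tight per-object budget: 60 s / 5,000 objects = 12 ms per object, or about 83 objects per second, which per-object remote Exchange commands may well exceed. The Python helpers below turn this arithmetic around to size the timeout or the batch size from a measured per-object time; the safety factor of 2 is an arbitrary assumption.

```python
import math

def per_object_budget(timeout_s: float, batch_size: int) -> float:
    """Seconds available per object before a full batch exceeds the timeout."""
    return timeout_s / batch_size

def min_timeout(batch_size: int, seconds_per_object: float,
                safety_factor: float = 2.0) -> int:
    """Whole-second timeout that covers a full batch, with headroom."""
    return math.ceil(batch_size * seconds_per_object * safety_factor)

def max_batch_size(timeout_s: float, seconds_per_object: float) -> int:
    """Largest batch that completes within the timeout at the measured rate."""
    return math.floor(timeout_s / seconds_per_object)
```

For example, at a hypothetical 0.25 s per object, a full 5,000-object batch would need a timeout of at least 2,500 s with the default safety factor, or the batch size would have to drop to 240 to fit within the 60 s default.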

Errors during export (e.g. bugs introduced while developing the Broker PowerShell connector code, or transient errors when that code executes commands) cause MIM to report errors against the exported objects, even though Broker may in fact have saved the updated attribute value correctly. If the export is then attempted again, Broker returns an “Other” error with the error detail “Internal error #9 (Cannot add value)”. This situation is difficult to debug, because it only appears on the second export, after an earlier error has already occurred for a different reason. Consequently, all Broker PowerShell “Update Entities” code should include error checking, be wrapped in exception handling, and use the Failed Operations mechanism (“$components.Failures.Push($entity)”) to record operation failures correctly. Refer to the Broker PowerShell connector documentation for details of this mechanism. It is arguably a bug in Broker that it fails to handle the second export’s attribute update correctly.
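The per-entity pattern is sketched below in Python for brevity; the real connector code is PowerShell, where the same shape is a try/catch around each entity’s commands. Here the `failures` list is a stand-in for `$components.Failures`, appending to it plays the role of `$components.Failures.Push($entity)`, and `apply_update` is a hypothetical placeholder for the Exchange commands.

```python
from typing import Callable, Iterable, List

def update_entities(entities: Iterable[dict],
                    apply_update: Callable[[dict], None],
                    failures: List[dict]) -> int:
    """Apply each entity's update independently and return the success count.

    `failures` stands in for $components.Failures in the Broker PowerShell
    connector; appending models $components.Failures.Push($entity).
    """
    succeeded = 0
    for entity in entities:
        try:
            apply_update(entity)
        except Exception:
            # Record this entity as a failed operation and carry on with
            # the remaining entities.
            failures.append(entity)
        else:
            succeeded += 1
    return succeeded
```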

When using PSSessions in the PowerShell connector to perform operations remotely, be sure to call Remove-PSSession to free each session as soon as it is no longer required. Failing to do so may contribute to Broker misbehaviour, although this is unconfirmed.
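The principle is to release the session in a cleanup block that runs whether or not the remote commands succeed (in PowerShell, Remove-PSSession in a `finally` block). A Python analogue using a context manager is sketched below; `RemoteSession` is a hypothetical stand-in that only counts open sessions.

```python
from contextlib import contextmanager
from typing import Iterator

class RemoteSession:
    """Hypothetical stand-in for a remote PSSession; tracks open sessions."""
    open_count = 0

    def __init__(self) -> None:
        RemoteSession.open_count += 1
        self.closed = False

    def close(self) -> None:
        if not self.closed:
            self.closed = True
            RemoteSession.open_count -= 1

@contextmanager
def remote_session() -> Iterator[RemoteSession]:
    session = RemoteSession()      # analogue of New-PSSession
    try:
        yield session
    finally:
        session.close()            # analogue of Remove-PSSession; runs on error too
```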

Quick Guide to Common MIM Export Errors

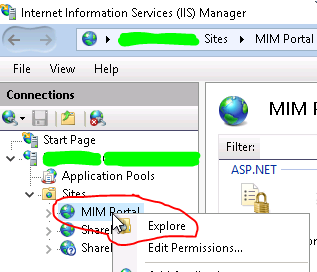

“cd-error” with no other details – a timeout on the MIM side. Increase the export profile’s timeout (“Operation Timeout (s)” on the third screen of the Run Profile wizard, NOT the Custom Data “Timeout (seconds)” on the second screen).

“Other” Internal error #9 (Cannot add value) – a previous export failed, but Broker still saved the attribute value while reporting the error back to MIM. MIM then retries the export, which confuses Broker because it has already saved a value for this attribute.

If you encounter other errors during export, remove your PowerShell code altogether and leave only an empty stub in place that does nothing (it should not even load a blank external script). Run your entire export (full data set) and make sure it works correctly first. Then try some delta exports (just a few records) and make sure they work. Once you are sure that mechanism works correctly, put your code back in gradually: add functionality bit by bit, running both the entire export (full data set) and the delta export (just a few records) each time.
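The procedure amounts to a linear search for the first change that breaks an export. A minimal Python sketch follows; the feature names and the two `run_*_export` callbacks are hypothetical placeholders for running the MIM export profiles by hand.

```python
from typing import Callable, List, Optional, Sequence

ExportRun = Callable[[List[str]], bool]  # returns True if the export succeeded

def first_breaking_feature(features: Sequence[str],
                           run_full_export: ExportRun,
                           run_delta_export: ExportRun) -> Optional[str]:
    """Re-enable features one at a time, checking a full export and a delta
    export after each step. Return the first feature whose addition makes
    either export fail, or None if every step passes."""
    enabled: List[str] = []
    # Step 0: the empty stub must pass both exports before any code returns.
    if not (run_full_export(list(enabled)) and run_delta_export(list(enabled))):
        raise RuntimeError("exports fail even with an empty stub")
    for feature in features:
        enabled.append(feature)
        if not (run_full_export(list(enabled)) and run_delta_export(list(enabled))):
            return feature
    return None
```

If the stub itself fails, the problem is not in the connector code, so MIM-side settings such as the export timeout are the first thing to check.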